While connecting, I'm getting the error below:

`* PySpark is installed at c:\users\divyansh jain\anaconda3\envs\dbconnect\lib\site-packages\pyspark`

`Java(TM) SE Runtime Environment (build 1.8.0_261-b12)`

`Java HotSpot(TM) 64-Bit Server VM (build 25.261-b12, mixed mode)`

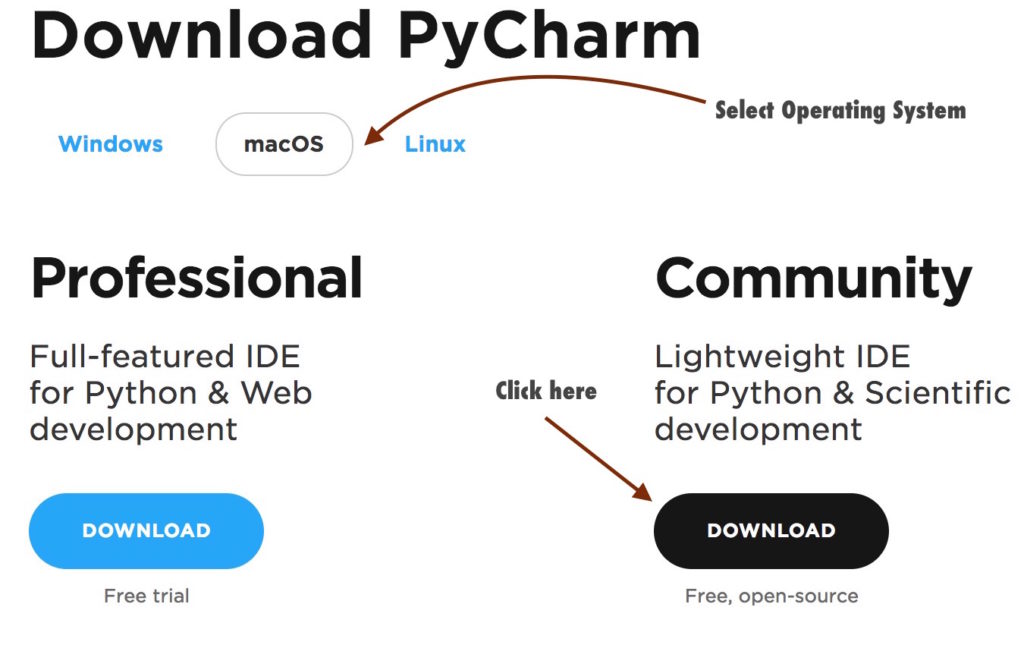

# PyCharm IDE install #

# PyCharm IDE Windows #

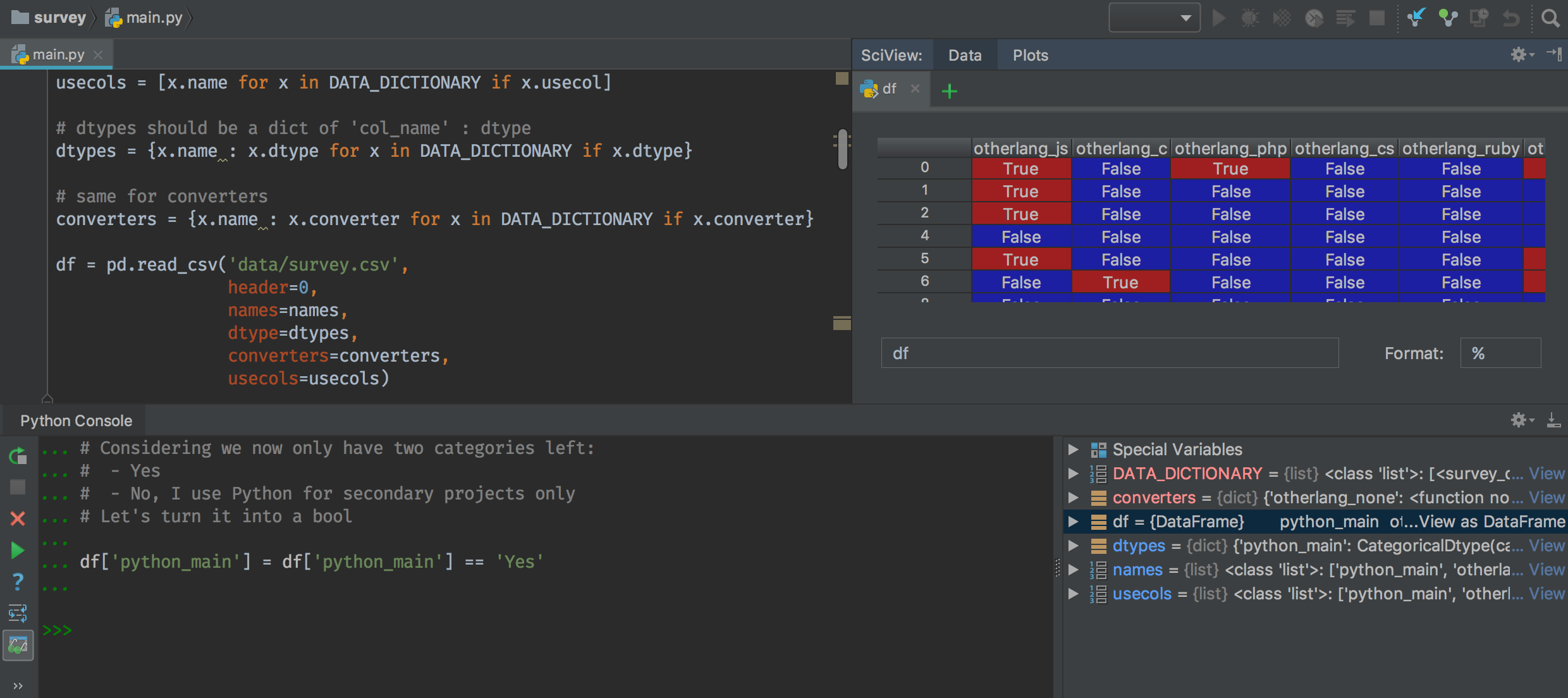

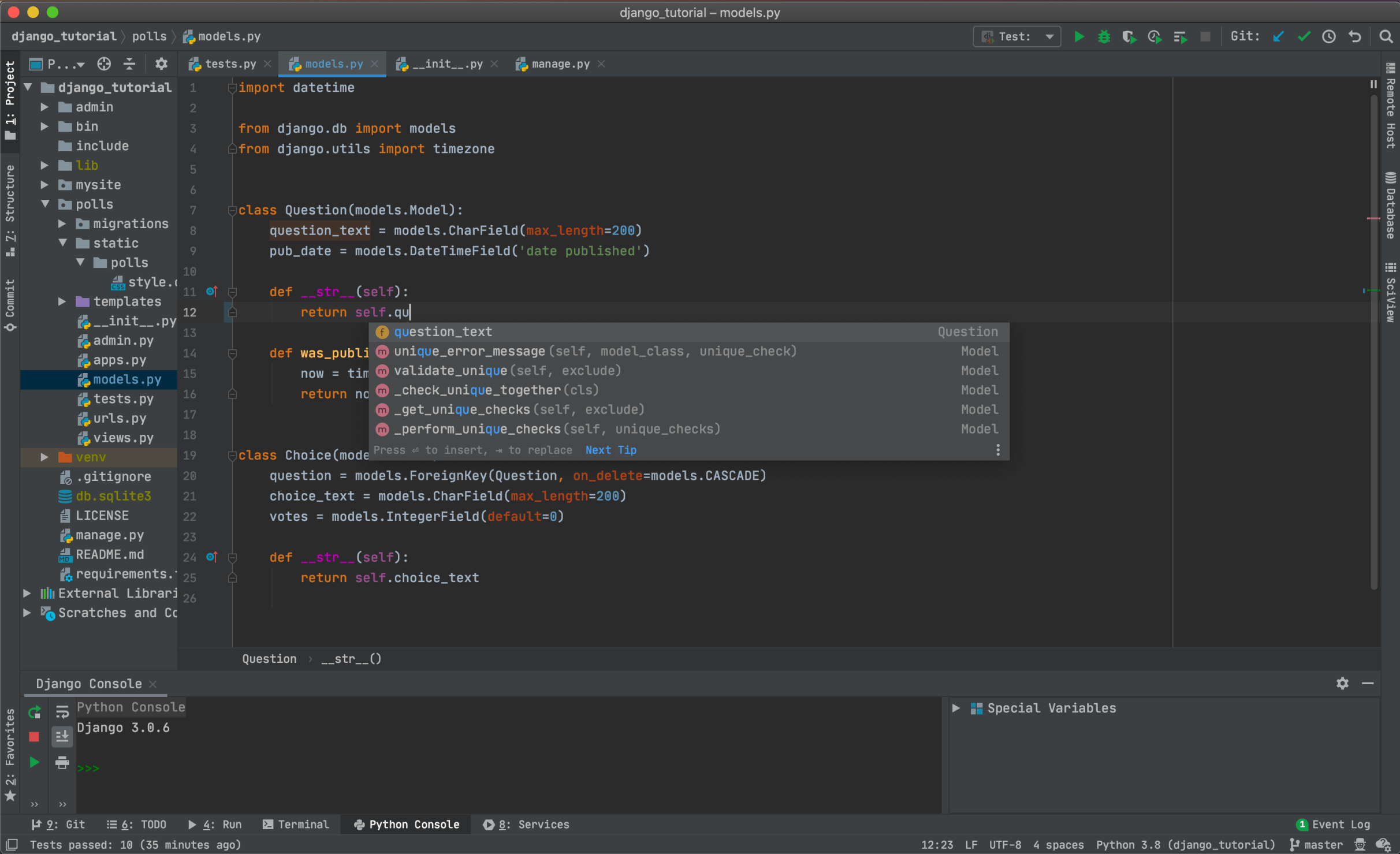

One key reason is that our Python version is required to match the version used by our Azure Databricks Runtime, which may not be the right choice for your other projects.

Install Miniconda to have access to the conda package and environment manager. Install it for all users to the default C:\ProgramData location, and choose to add conda to the PATH to simplify future use. After the install completes, launch the Anaconda prompt. Keep this prompt open, as we will return to it.

# Databricks Connect – Install and Configure #

Next, we will configure Databricks Connect so we can run code in PyCharm and have it sent to our cluster. We need to launch our Azure Databricks workspace and have …

The cluster will need to have these two items added in the Advanced Options -> Spark Config section (this requires editing and restarting the cluster):

To connect with Databricks Connect we need to … From the Azure Databricks workspace, go to User Settings by clicking the person icon in the top right corner. Copy and save the token that is generated.

We also need to get a few properties from the cluster page.

Now return to the Anaconda prompt and run:

`pip uninstall pyspark` (if this is a new environment, this will have no effect)

Set the project name, then expand the Project Interpreter section and choose Existing interpreter. After clicking the box next to the existing interpreter drop-down, configure it to use your dbconnect conda environment.

Test by creating a new Python file in your project. Python Spark commands that work from an Azure Databricks notebook attached to the cluster should work from your IDE if you …
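The local interpreter has to match the runtime's Python version, so it can help to check this before going further. This is a minimal sketch: `matches_runtime_python` is a hypothetical helper, and "3.7" is only an illustrative value. Look up the actual version in the release notes for your cluster's Databricks Runtime.

```python
import sys
from typing import Optional, Tuple


def matches_runtime_python(expected: str, actual: Optional[Tuple[int, int]] = None) -> bool:
    """Return True if the major.minor version of the local interpreter equals `expected`.

    `expected` is the Python version listed for your cluster's Databricks
    Runtime (e.g. "3.7" or "3.7.5"); only major.minor is compared.
    """
    if actual is None:
        # Default to the interpreter running this script (e.g. the dbconnect env).
        actual = (sys.version_info.major, sys.version_info.minor)
    major, minor = (int(part) for part in expected.split(".")[:2])
    return (major, minor) == tuple(actual[:2])


if __name__ == "__main__":
    # "3.7" is an illustrative value, not a recommendation.
    print("Local Python:", sys.version.split()[0])
    print("Matches 3.7:", matches_runtime_python("3.7"))
```

Run it from the activated conda environment so that it reports the interpreter PyCharm will use.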
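The two Spark Config entries are not spelled out above. This sketch assumes they are the ones described in the legacy Databricks Connect documentation: `spark.databricks.service.server.enabled true`, plus `spark.databricks.service.port 8787` on Azure. It renders them in the one-pair-per-line `key value` format that the cluster's Spark Config box accepts. Treat both values as assumptions and check the documentation for your Databricks Connect version.

```python
# Assumption: the two cluster settings from the legacy Databricks Connect docs;
# 8787 was the port documented for Azure and may differ for your setup.
DBCONNECT_SPARK_CONF = {
    "spark.databricks.service.server.enabled": "true",
    "spark.databricks.service.port": "8787",
}


def to_spark_config_text(settings: dict) -> str:
    """Render settings as 'key value' lines, one per line, for the Spark Config box."""
    return "\n".join(f"{key} {value}" for key, value in settings.items())


if __name__ == "__main__":
    print(to_spark_config_text(DBCONNECT_SPARK_CONF))
```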
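The cluster page properties can usually be read from the browser's address bar. This sketch makes the following assumptions:

- It expects an Azure cluster URL where the org ID is the `o` query parameter and the cluster ID follows `clusters/` in the URL fragment.
- `cluster_properties` is a hypothetical helper.
- The IDs in the example URL are made up.

```python
import re
from urllib.parse import parse_qs, urlparse


def cluster_properties(url: str) -> dict:
    """Extract host, org ID and cluster ID from a cluster page URL.

    Assumed URL shape (made-up IDs):
    https://adb-1234567890123456.7.azuredatabricks.net/?o=1234567890123456#/setting/clusters/0123-456789-abcde123/configuration
    """
    parsed = urlparse(url)
    # On Azure, the org (workspace) ID is the "o" query parameter.
    org_ids = parse_qs(parsed.query).get("o", [])
    # The cluster ID is the path segment after "clusters/" in the fragment.
    match = re.search(r"clusters/([^/?#]+)", parsed.fragment)
    return {
        "host": f"{parsed.scheme}://{parsed.netloc}",
        "org_id": org_ids[0] if org_ids else None,
        "cluster_id": match.group(1) if match else None,
    }


if __name__ == "__main__":
    example = ("https://adb-1234567890123456.7.azuredatabricks.net/?o=1234567890123456"
               "#/setting/clusters/0123-456789-abcde123/configuration")
    print(cluster_properties(example))
```

The legacy `databricks-connect configure` command prompts for these values: the host, org ID and cluster ID, along with the saved token and the port.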

# PyCharm IDE code #

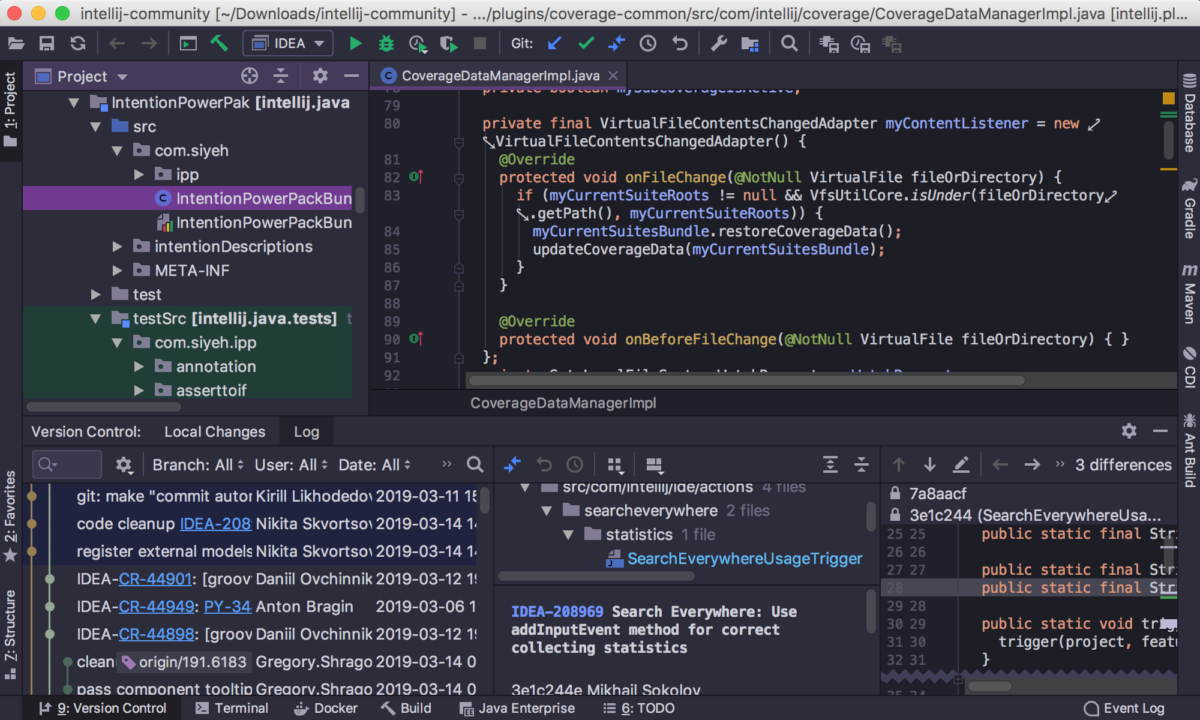

Azure Databricks is a powerful platform for data pipelines, bringing the power of Spark’s distributed data processing capabilities along with many features that make deploying and maintaining a cluster easier, including integration with other Azure components such as Azure Data Lake Storage and Azure SQL Database. If you have tried out tutorials for Databricks, you likely created a notebook, pasted some Spark code from the example, and the example ran across a Spark cluster as if it were magic. Notebooks are useful for many things, and Azure Databricks even lets you schedule them as jobs.

But when developing a large project with a team of people that will go through many versions, many developers will prefer to use PyCharm or another IDE (Integrated Development Environment). Getting to a streamlined process of developing in PyCharm and submitting the code to a Spark cluster for testing can be a challenge, and I have been searching for better options for years.
